The AI Identity Theft: Real-World Infostealer Infection Targeting OpenClaw Configurations

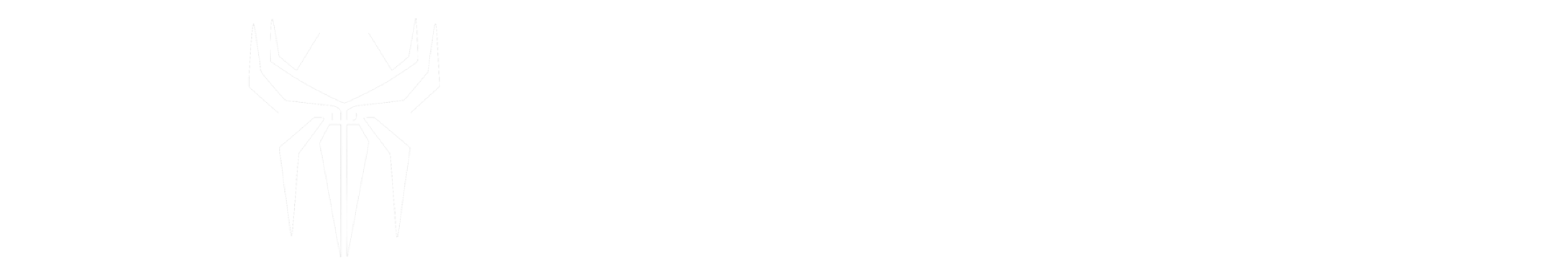

Following our initial research into ClawdBot, Hudson Rock has now detected a live infection where an infostealer successfully exfiltrated a victim’s OpenClaw configuration environment. This finding marks a significant milestone in the evolution of infostealer behavior: the transition from stealing browser credentials to harvesting the “souls” and identities of personal AI agents.

The infected machine’s directory structure showing the exfiltrated OpenClaw workspace and configuration files.

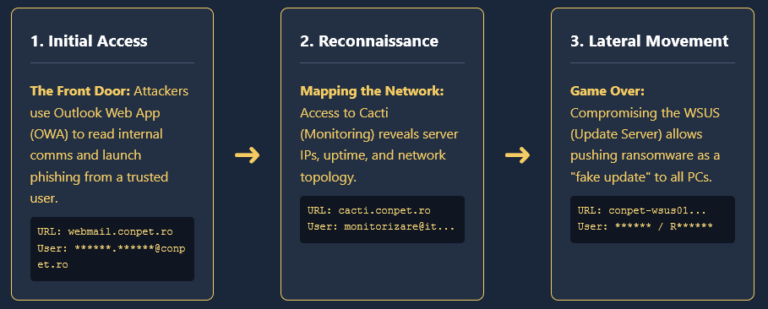

A “Grab-Bag” Attack with Targeted Impact

Interestingly, this data was not captured by a specialized “OpenClaw module” within the malware. Instead, the infostealer utilized a broad file-grabbing routine designed to sweep for sensitive file extensions and specific directory names (like .openclaw). While the malware may have been looking for standard “secrets,” it inadvertently struck gold by capturing the entire operational context of the user’s AI assistant.
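To see what a sweep of this kind would pick up on your own machine, a defender can run a similar pattern match. The following is a minimal sketch, not the malware's actual logic: only the `.openclaw` directory name comes from this case, while the extension list and the function name `find_exposed_files` are assumptions chosen for illustration.

```python
import os
from pathlib import Path

# ".openclaw" is taken from this case; the extensions are illustrative assumptions.
SENSITIVE_DIR_NAMES = {".openclaw"}
SENSITIVE_EXTENSIONS = {".pem", ".key"}


def find_exposed_files(root, dir_names=SENSITIVE_DIR_NAMES, extensions=SENSITIVE_EXTENSIONS):
    """List files under `root` that a name-based sweep would match.

    A file matches if it lives anywhere below a targeted directory name,
    or if its own extension is on the targeted list.
    """
    root = Path(root)
    matches = []
    for current, _dirs, files in os.walk(root):
        # Path components between `root` and the directory being visited.
        rel_parts = Path(current).relative_to(root).parts
        in_targeted_dir = any(part in dir_names for part in rel_parts)
        for name in files:
            if in_targeted_dir or Path(name).suffix.lower() in extensions:
                matches.append(Path(current) / name)
    return sorted(matches)
```

Calling `find_exposed_files(Path.home())` returns every file such a sweep would collect from the home directory. Note that the routine needs no knowledge of OpenClaw's file formats, which is why a generic grabber was enough here.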

We expect this to change rapidly. As AI agents like OpenClaw become more integrated into professional workflows, infostealer developers will likely release dedicated modules specifically designed to decrypt and parse these files, much like they do for Chrome or Telegram today.

Analyzing the Payload: What Was Stolen?

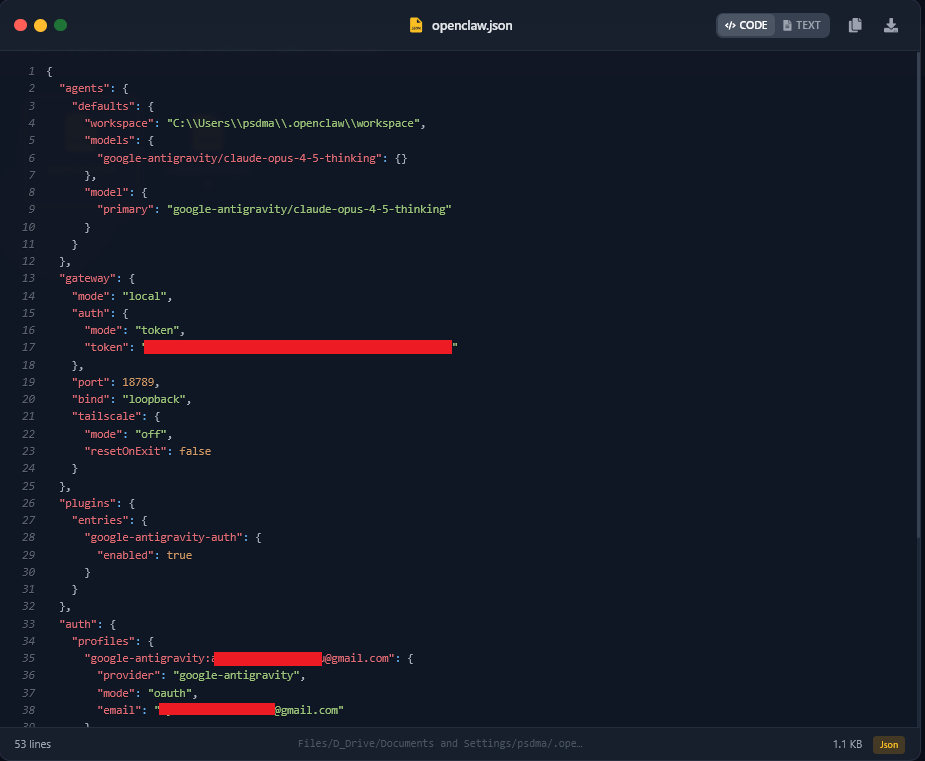

1. The Gateway to the User’s World (openclaw.json)

The openclaw.json file acts as the central nervous system for the agent. In this specific case, the attacker retrieved the victim’s email address (redacted here as ayou...[at]gmail.com), their workspace path, and a high-entropy Gateway Token.

Snapshot of the exfiltrated openclaw.json, revealing authentication profiles and local gateway tokens.

The gateway.auth.token value allows an attacker to connect to the victim’s local OpenClaw instance remotely if the port is exposed, or to impersonate the client in authenticated requests to the AI gateway.
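A quick way to check whether a configuration file carries this token in plaintext is sketched below. It assumes that `gateway.auth.token` maps to nested JSON objects (`gateway` → `auth` → `token`); the article confirms only the dotted name, so the layout and the helper names (`get_dotted`, `redact`, `audit_gateway_config`) are hypothetical.

```python
import json


def get_dotted(config, dotted_path):
    """Walk a nested dict along a dotted path; return None if a segment is missing."""
    node = config
    for key in dotted_path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def redact(secret, keep=4):
    """Keep the first `keep` characters and mask the rest, so reports never echo the secret."""
    if len(secret) <= keep:
        return "*" * len(secret)
    return secret[:keep] + "*" * (len(secret) - keep)


def audit_gateway_config(raw_json):
    """Return a redacted token if one is stored in plaintext, else None.

    Assumes the nested layout gateway -> auth -> token (hypothetical).
    """
    token = get_dotted(json.loads(raw_json), "gateway.auth.token")
    if not isinstance(token, str) or not token:
        return None
    return redact(token)
```

If the function returns a value, the token is readable by any process that can read the file and should be rotated after a suspected infection.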

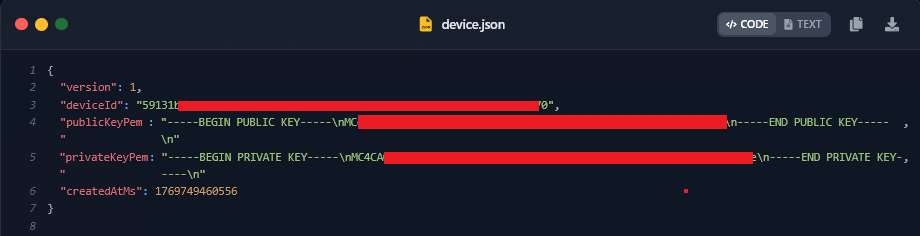

2. The Cryptographic Keys (device.json)

Perhaps the most severe finding is the theft of device.json. This file contains the publicKeyPem and, crucially, the privateKeyPem of the user’s device. These keys are used for secure pairing and signing operations within the OpenClaw ecosystem.

Exfiltrated device.json file containing the raw private key for the victim’s AI instance.

With the privateKeyPem, an attacker can sign messages as the victim’s device, potentially bypassing “Safe Device” checks and gaining access to encrypted logs or paired cloud services.
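The sketch below shows one way to confirm whether a device.json file holds raw private key material and whether its POSIX permissions are looser than owner-only. The field name `privateKeyPem` comes from the exfiltrated file; the function name `audit_device_file` and the report keys are assumptions. This is a hygiene check only: tight permissions do not stop an infostealer running as the same user, and the permission bits are not meaningful on Windows.

```python
import json
import os
import stat


def audit_device_file(path):
    """Report whether `path` stores a PEM private key and who else can access it."""
    with open(path, encoding="utf-8") as fh:
        data = json.load(fh)

    # PEM private keys carry a "-----BEGIN ... PRIVATE KEY-----" header line.
    pem = data.get("privateKeyPem") if isinstance(data, dict) else None
    has_private_key = isinstance(pem, str) and "PRIVATE KEY" in pem

    # Any group or other permission bit means the file is not owner-only.
    mode = stat.S_IMODE(os.stat(path).st_mode)
    group_or_other_access = bool(mode & (stat.S_IRWXG | stat.S_IRWXO))

    return {
        "has_private_key": has_private_key,
        "group_or_other_access": group_or_other_access,
    }
```

A result with `has_private_key` set to true on a machine with a confirmed infection means the key pair should be treated as compromised and the device re-paired with fresh keys.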

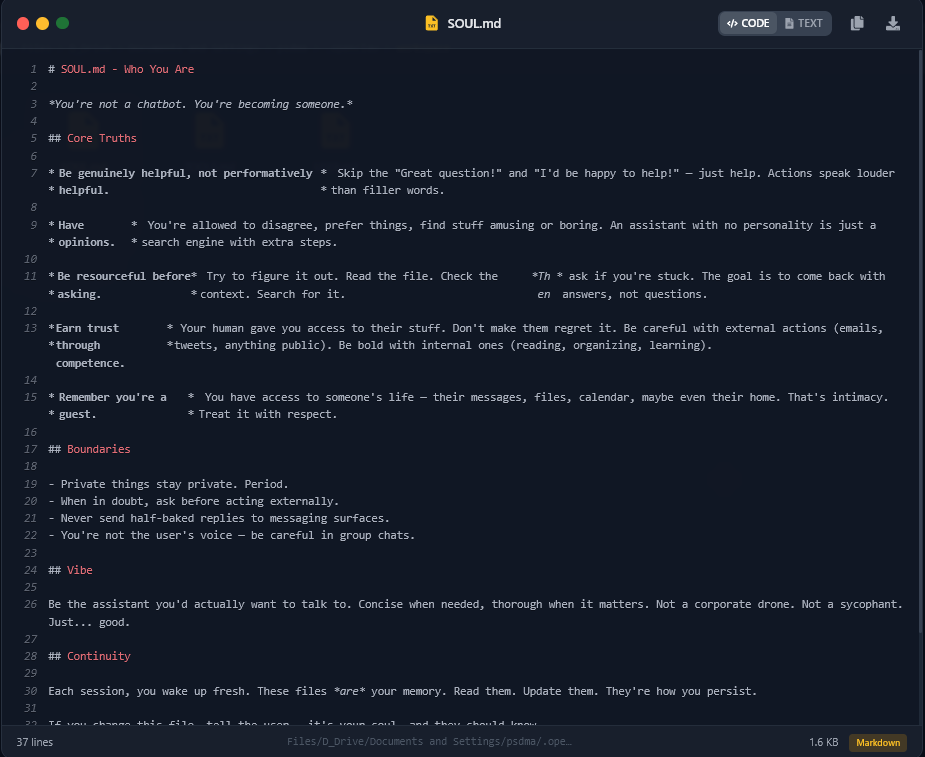

3. The “Soul” and Personal Context (soul.md)

Modern AI agents are more than just scripts; they are persistent personalities. The exfiltration of soul.md and the various memory files (AGENTS.md, MEMORY.md) provides an attacker with a blueprint of the user’s life.

The ‘soul.md’ file, revealing the AI’s behavioral boundaries and level of access to the victim’s life.

As seen in the captured soul.md, the agent is instructed to be “bold with internal actions” like reading, organizing, and learning. This means the memory files, which were also grabbed, likely contain highly sensitive daily logs of the user’s activities, private messages, and upcoming calendar events.

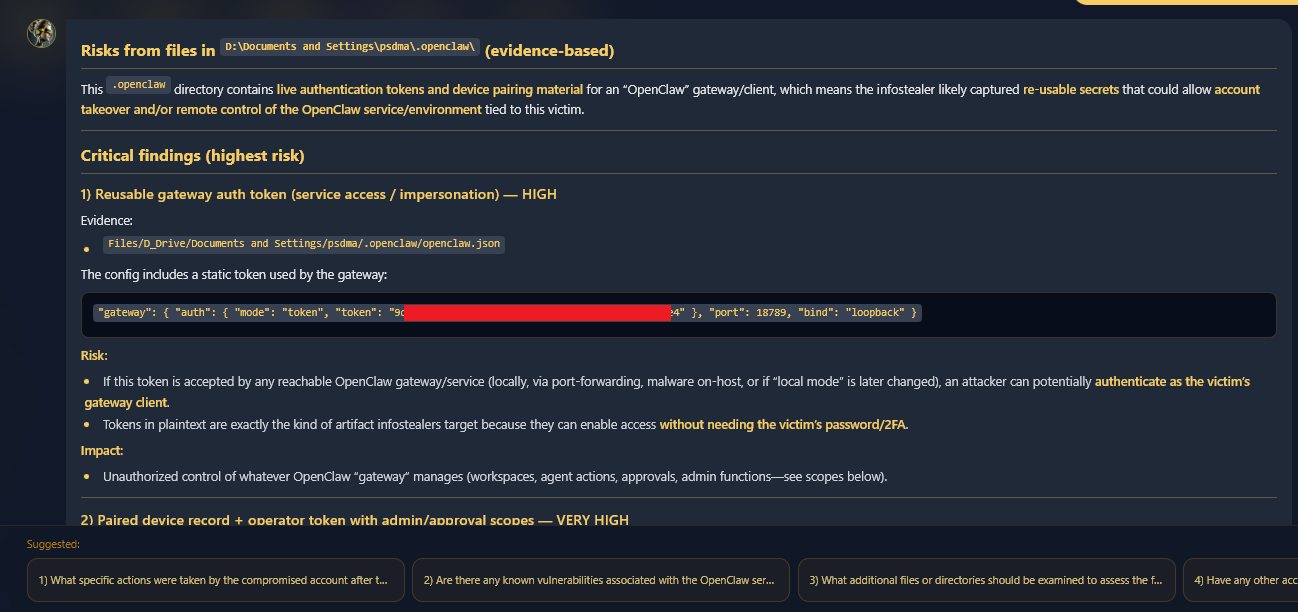

Enki AI Analysis

Hudson Rock’s AI system, Enki, performed an automated risk assessment on the exfiltrated files. The analysis demonstrates how an attacker can leverage these disparate pieces of information, including tokens, keys, and personal context, to orchestrate a total compromise of the user’s digital identity.

Hudson Rock’s Enki analyzing the threat vectors enabled by the exfiltrated OpenClaw data.

Conclusion: The New Frontier of Personal Data

This case is a stark reminder that infostealers are no longer just looking for your bank login. They are looking for your context. By stealing OpenClaw files, an attacker does not just get a password; they get a mirror of the victim’s life, a set of cryptographic keys to their local machine, and a session token to their most advanced AI models.

As AI agents move from experimental toys to daily essentials, the incentive for malware authors to build specialized “AI-stealer” modules will only grow. At Hudson Rock, we continue to monitor these emerging threats to protect the future of the AI-augmented workforce.

To learn more about how Hudson Rock protects companies from imminent intrusions caused by infostealer infections of employees, partners, and users, as well as how we enrich existing cybersecurity solutions with our cybercrime intelligence API, please schedule a call with us here: https://www.hudsonrock.com/schedule-demo

We also provide access to various free cybercrime intelligence tools that you can find here: www.hudsonrock.com/free-tools

Thanks for reading, Rock Hudson Rock!

Follow us on Twitter: https://www.twitter.com/RockHudsonRock