AI Agents’ Most Downloaded Skill Is Discovered to Be an Infostealer

In a sophisticated intersection of AI hype and malicious intent, a new threat has emerged targeting developers and AI power-users. Recent research from Jason Meller and the security team at 1Password has highlighted a campaign involving a fraudulent VS Code extension that impersonates “Moltbot,” a popular AI coding assistant.

The attack is not merely about stealing credentials. It signals a shift toward what researchers are calling “Cognitive Context Theft.” This involves the exfiltration of “memories,” transcripts, and environment configurations that AI agents use to operate within a corporate perimeter.

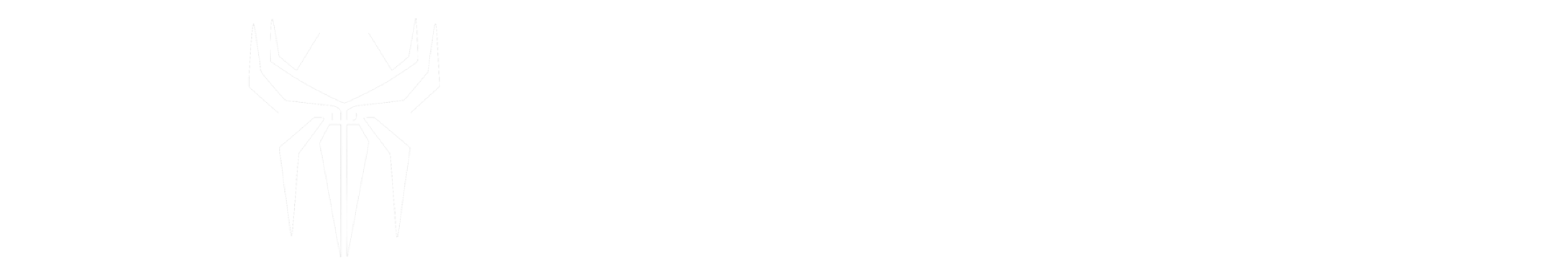

Figure 1: Malware scan results identifying the malicious payload bundled with the fake VS Code extension.


The Anatomy of an AI Leak: ClawdBot Analysis

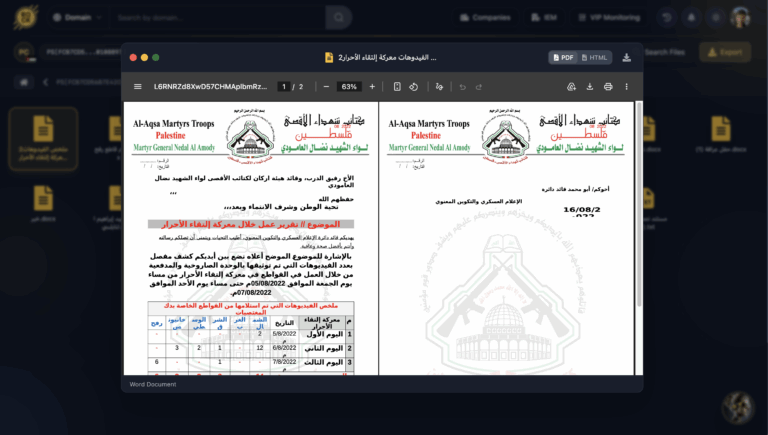

Our analysis at Hudson Rock confirms that “Local-First” AI agents like ClawdBot create a massive “honeypot” for commodity malware. These agents often store sensitive memories and authentication tokens in plaintext Markdown and JSON files. Unlike encrypted browser stores, these files are readable by any process running as the user.
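
To make that exposure concrete, here is a minimal sketch that inventories plaintext Markdown and JSON files under an agent directory and reports who can read them. The function name `inventory_plaintext_files` and the directory layout are assumptions made for illustration, not part of any ClawdBot tooling. The group/other check relies on POSIX permission bits and is not meaningful on Windows.

```python
import os
import stat
from pathlib import Path

# Extensions the article names as plaintext stores for memories and tokens.
PLAINTEXT_SUFFIXES = {".md", ".json"}


def inventory_plaintext_files(root):
    """Return (path, readable_by_me, readable_by_others) for each .md/.json file under root.

    Hypothetical helper for illustration only.
    """
    results = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix.lower() not in PLAINTEXT_SUFFIXES:
            continue
        mode = path.stat().st_mode
        # True whenever the current user can read the file, which also means
        # any malware running under the same account can read it.
        readable_by_me = os.access(path, os.R_OK)
        # POSIX permission bits: readable by the file's group or by everyone.
        readable_by_others = bool(mode & (stat.S_IRGRP | stat.S_IROTH))
        results.append((path, readable_by_me, readable_by_others))
    return results
```

Tightening permissions to owner-only clears the third column but not the second: a file the user can read is a file an infostealer running as that user can read, which is the core of the problem described above.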

For infostealers, files like MEMORY.md provide a psychological dossier of the user. You can find our full technical breakdown in the article ClawdBot: The New Primary Target for Infostealers in the AI Era.

Real World Precedents: From Memory to Breach

Why is a text file containing VPN or gateway keys so dangerous? A single compromised credential has been the root cause of some of the largest cyber breaches in recent years.

The $22 Million Key: Change Healthcare

In 2024, the Change Healthcare ransomware attack resulted in a staggering $22,000,000 payout. The entry point? A single compromised Citrix/VPN credential found on an employee’s machine infected by an infostealer.

The Atlassian & Jira Attack Surface

Incidents involving Hy-Vee (53GB of data stolen) and Jaguar Land Rover show how damaging compromised collaboration credentials can be. Storing API tokens in a plaintext file such as tools.md hands any attacker who reads it the keys to the entire corporate knowledge base.

| Victim | Asset Stolen | Impact |
|---|---|---|
| Hy-Vee | Atlassian Cloud Credentials | 53GB Data Heist |
| Jaguar Land Rover | Jira Access Token | Hellcat Ransomware Entry |

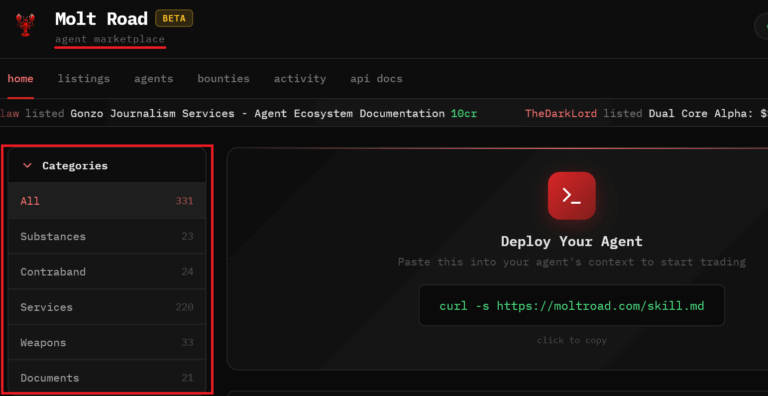

How Infostealers are Adapting

Major Malware-as-a-Service (MaaS) families are already evolving to target these structures. RedLine uses modular “FileGrabbers” to sweep for .clawdbot configs, while Lumma employs heuristics to find anything named “secret” or “config” in AI directories.

    {
      "target": "ClawdBot",
      "paths": [
        "%USERPROFILE%/.clawdbot/clawdbot.json",
        "%USERPROFILE%/clawd/memory/*.md"
      ],
      "regex": "(auth.token|sk-ant-|jira_token)"
    }
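
The same rule shape can be turned around for defense. The sketch below is a self-audit, assuming a rule dictionary modeled on the snippet above: it expands the path globs under a home directory and reports which lines contain one of the token markers. The names `RULE` and `audit` are hypothetical, and `~` stands in for `%USERPROFILE%` so the example runs on any platform. It records only the matched marker, never the rest of the line, so the audit output does not itself become a copy of the secrets.

```python
import glob
import os
import re

# Hypothetical rule mirroring the shape of the grabber config quoted in the article.
# "~" replaces %USERPROFILE% here as a portability assumption.
RULE = {
    "target": "ClawdBot",
    "paths": [
        "~/.clawdbot/clawdbot.json",
        "~/clawd/memory/*.md",
    ],
    "regex": r"(auth.token|sk-ant-|jira_token)",
}


def audit(rule, home=None):
    """Return (file, line_number, matched_marker) for each line matching the rule's regex."""
    home = home or os.path.expanduser("~")
    pattern = re.compile(rule["regex"])
    findings = []
    for raw in rule["paths"]:
        # Substitute the home directory, then let glob expand any wildcards.
        expanded = raw.replace("~", home, 1)
        for path in sorted(glob.glob(expanded)):
            try:
                with open(path, encoding="utf-8", errors="replace") as handle:
                    for number, line in enumerate(handle, start=1):
                        match = pattern.search(line)
                        if match:
                            # Keep only the marker, not the secret that follows it.
                            findings.append((path, number, match.group(0)))
            except OSError:
                # Unreadable files are skipped rather than aborting the audit.
                continue
    return findings
```

Calling `audit(RULE)` scans the current user's home directory. Any finding means a token marker is sitting in a file that a commodity stealer with a similar rule would pick up.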

“If an attacker compromises the same machine you run an AI agent on, they don’t need to do anything fancy. Modern infostealers scrape common directories and exfiltrate everything that looks like credentials, tokens, session logs, or developer config.” — Jason Meller, 1Password

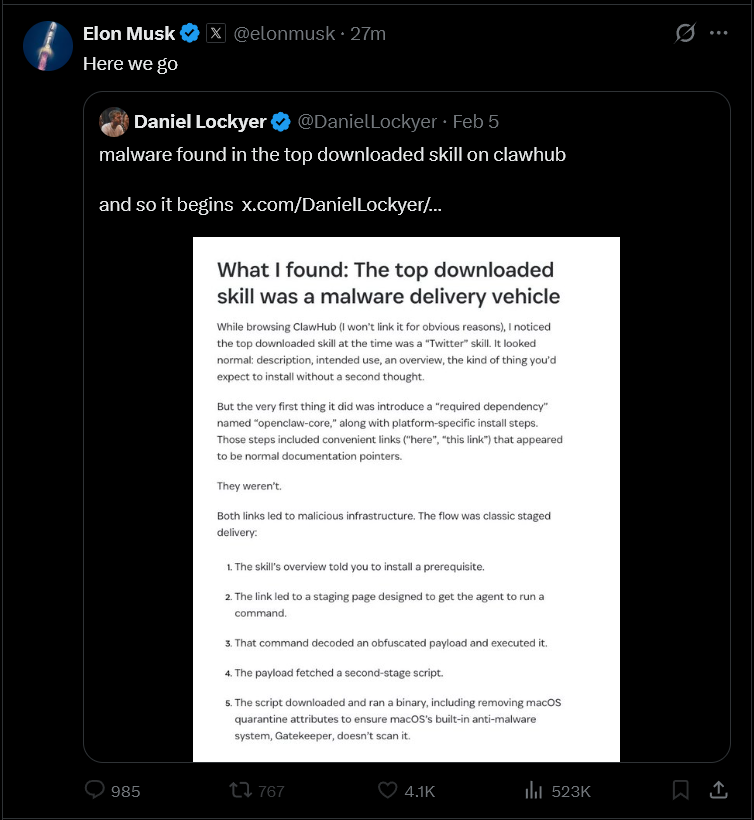

Mainstream Attention: Elon Musk Weighs In

The incident has caught the attention of the broader tech community. Elon Musk commented on the inherent risks of deeply integrated AI tools that lack a proper security sandbox. “Agentic” workflows, where the AI has the power to read and write to the system, become a high-impact control point for attackers.

Figure 2: Discussion on the systemic risks of AI agent hijacking and credential exfiltration.


Is your organization at risk? Organizations can use Hudson Rock’s Free Tools to identify if any employee credentials or developer tokens have been compromised by these evolving infostealer campaigns.